Latency is the time it takes for data to travel from one point to another. Lowering latency is an essential part of building a good user experience.

Latency is the time it takes for data to pass from one point on a network to another. Suppose Server A in New York sends a data packet to Server B in London. Server A sends the packet at 04:38:00.000 GMT, and Server B receives it at 04:38:00.145 GMT. The latency on this path is the difference between these two times: 0.145 seconds, or 145 milliseconds.
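
The arithmetic in this example can be sketched in a few lines of Python. The function name `one_way_latency_ms` is made up for illustration, and the sketch assumes the clocks on both servers are perfectly synchronized, which real measurements cannot take for granted.

```python
from datetime import datetime, timedelta

def one_way_latency_ms(sent: str, received: str) -> float:
    """Return the time between two HH:MM:SS.mmm timestamps, in milliseconds."""
    fmt = "%H:%M:%S.%f"
    delta = datetime.strptime(received, fmt) - datetime.strptime(sent, fmt)
    # Dividing by a one-millisecond timedelta converts the difference to ms.
    return delta / timedelta(milliseconds=1)

# Timestamps from the New York to London example.
latency = one_way_latency_ms("04:38:00.000", "04:38:00.145")
print(f"{latency:.0f} ms")  # 145 ms
```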

Most often, latency is measured between a user’s device (the “client” device) and a data center. This measurement helps developers understand how quickly a webpage or application will load for users.
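
One rough way to take this kind of measurement from a client is to time how long a TCP connection takes to open. The sketch below is only an approximation: the helper name `tcp_connect_time_ms` is hypothetical, and the result includes DNS lookup time when a hostname is passed, so it overstates pure network latency.

```python
import socket
import time

def tcp_connect_time_ms(host: str, port: int = 443, timeout: float = 3.0) -> float:
    """Time how long it takes to open a TCP connection, in milliseconds.

    Opening the connection takes about one round trip between client and
    server, plus name resolution if `host` is a hostname.
    """
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000
```

Calling `tcp_connect_time_ms("example.com")` from a user's machine gives a number in the same ballpark as the connection setup delay that user would experience; repeating the call and taking the median smooths out one-off spikes.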

Although data on the Internet travels at close to the speed of light, the effects of distance and delays caused by Internet infrastructure equipment mean that latency can never be eliminated. It can and should, however, be minimized. High latency results in poor website performance, negatively affects SEO, and can induce users to leave the site or application altogether.

What causes Internet latency?

One of the principal causes of network latency is the distance between client devices making requests and the servers responding to those requests. If a website is hosted in a data center in Columbus, Ohio, it will receive requests fairly quickly from users in Cincinnati (about 100 miles away), likely within 5-10 milliseconds. On the other hand, requests from users in Los Angeles (about 2,200 miles away) will take longer to arrive, closer to 40-50 milliseconds.

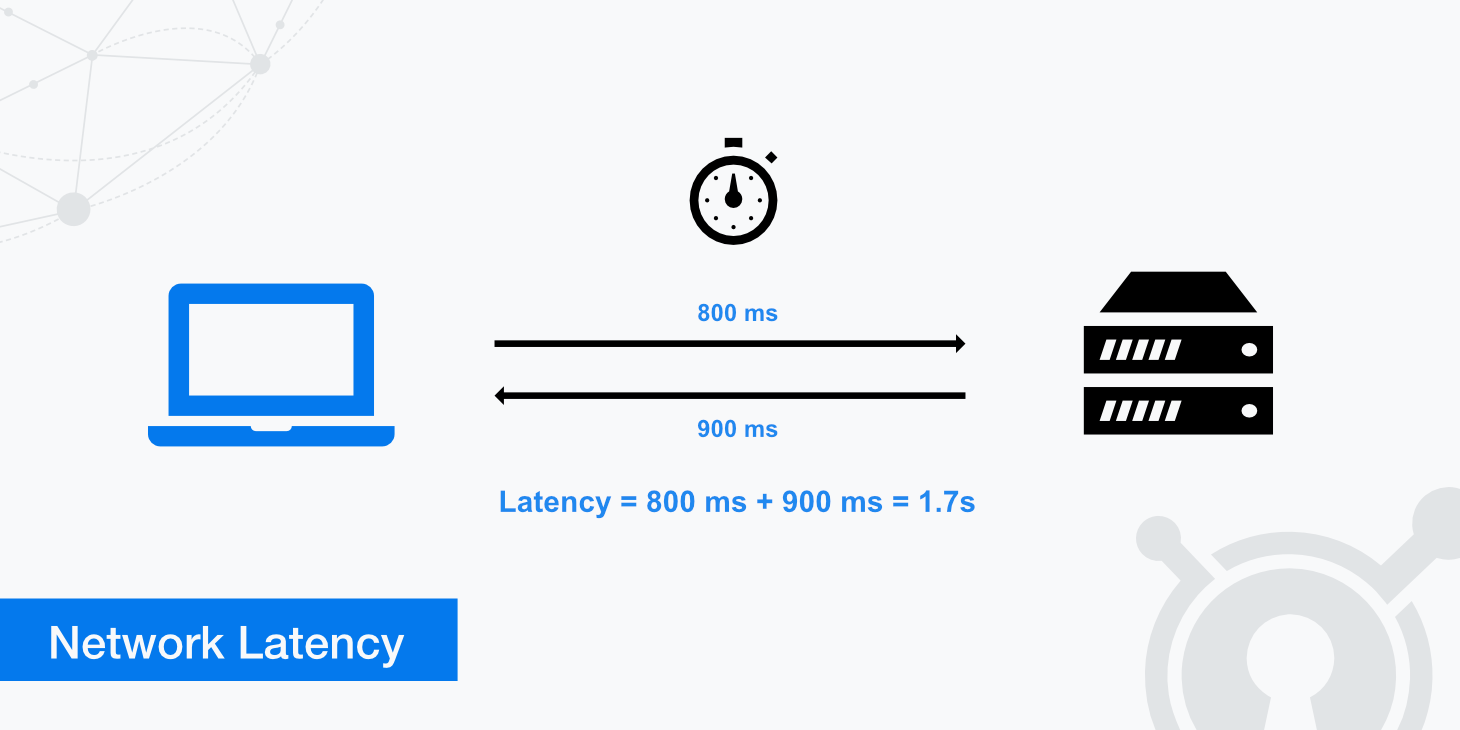
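
As a rough sanity check, the delay from distance alone can be estimated. This is a lower bound under stated assumptions: signals are taken to travel through fiber at about 200,000 km/s (roughly two-thirds of the speed of light in a vacuum) along a straight line. Real routes are longer and add equipment delays, which is why the figures quoted above are higher than what this sketch prints.

```python
MILES_TO_KM = 1.609344
# Assumption: signal speed in optical fiber, roughly two-thirds of c.
FIBER_SPEED_KM_PER_S = 200_000

def propagation_delay_ms(distance_miles: float) -> float:
    """Minimum one-way delay from distance alone, in milliseconds."""
    distance_km = distance_miles * MILES_TO_KM
    return distance_km / FIBER_SPEED_KM_PER_S * 1000

# Approximate distances from Columbus, Ohio, as given in the text.
for city, miles in [("Cincinnati", 100), ("Los Angeles", 2200)]:
    print(f"{city}: at least {propagation_delay_ms(miles):.1f} ms one way")
```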

An increase of a few milliseconds may not seem like a lot, but this is compounded by all the back-and-forth communication necessary for the client and server to establish a connection, the total size and load time of the page, and any problems with the network equipment the data passes through along the way. The time it takes for a response to reach a client device after a client request is known as round trip time (RTT). RTT is roughly double the one-way latency, since data must travel in both directions, there and back.
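
To illustrate how round trips compound, here is a simplified sketch. It assumes three round trips before the first byte of a page arrives (a TCP handshake, a TLS 1.3 handshake, and the HTTP request and response) and ignores server processing and transfer time; the actual count depends on the protocols in use.

```python
def time_to_first_byte_ms(one_way_latency_ms: float, round_trips: int = 3) -> float:
    """Estimate the delay before the first response byte, in milliseconds."""
    rtt_ms = 2 * one_way_latency_ms  # there and back
    return rtt_ms * round_trips

# Assumed round trips: TCP handshake + TLS 1.3 handshake + HTTP exchange.
for latency in (10, 50):
    print(f"{latency} ms latency -> {time_to_first_byte_ms(latency):.0f} ms")
# 10 ms latency -> 60 ms
# 50 ms latency -> 300 ms
```

Under these assumptions, a 40 millisecond difference in one-way latency becomes a 240 millisecond difference before any content starts to arrive.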

Data traversing the Internet usually has to cross not just one but multiple networks. The more networks an HTTP response needs to pass through, the more opportunities there are for delays. For example, data packets cross between networks through Internet Exchange Points (IXPs). There, routers have to process and route the data packets, and at times routers may need to break them up into smaller packets, all of which add a few milliseconds to RTT.

Network latency, throughput, and bandwidth

Latency, bandwidth, and throughput are all interrelated, but they measure different things. Bandwidth is the maximum amount of data that can pass through the network at any given time. Throughput is the average amount of data that actually passes through over a given period. Throughput is not necessarily equivalent to bandwidth because it is affected by latency and other factors. Latency is a measurement of time, not of how much data is downloaded over time.
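
One way to see how latency limits throughput: a sender that may have only a fixed window of unacknowledged data in flight can deliver at most one window per round trip. The sketch below applies that rule to a single connection with a fixed window and no packet loss; these are simplifying assumptions, and the 64 KiB window and 100 Mbps link are illustrative values only.

```python
def max_throughput_mbps(window_bytes: int, rtt_ms: float, bandwidth_mbps: float) -> float:
    """Upper bound on throughput for one connection, in megabits per second."""
    # At most one full window can be delivered per round trip.
    window_limited_mbps = (window_bytes * 8) / (rtt_ms / 1000) / 1_000_000
    # Throughput can never exceed the link's bandwidth.
    return min(bandwidth_mbps, window_limited_mbps)

# Same 100 Mbps link and 64 KiB window, different round trip times.
for rtt in (1, 10, 100):
    print(f"RTT {rtt} ms: {max_throughput_mbps(65536, rtt, 100):.1f} Mbps")
```

With these inputs the bandwidth is the limit only at the shortest round trip time; at longer round trip times the same link carries far less than its rated bandwidth.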

How can latency be reduced?

Using a CDN (content delivery network) is a significant step toward reducing latency. A CDN caches static content and serves it to users. CDN servers are distributed in multiple locations so that content is stored closer to end users and does not need to travel as far to reach them. This means loading a webpage will take less time, improving website speed and performance.

Other factors aside from latency can slow down performance as well. Web developers can minimize the number of render-blocking resources (loading JavaScript last, for example), optimize images for faster loading, and reduce file sizes wherever possible. Code minification is one way of reducing the size of JavaScript and CSS files.

It is also possible to improve perceived page performance by strategically loading certain assets first. A webpage can be configured to load the above-the-fold area of a page first so that users can interact with it even before the rest finishes loading (above-the-fold refers to what appears in a browser window before the user scrolls down). Webpages can also load assets only as they are needed, using a technique known as lazy loading. These approaches do not reduce network latency, but they do improve the user’s perception of page speed.

How can users fix latency on their end?

Sometimes, network “latency” (slow network performance) is caused by issues on the user’s side, not the server’s. Consumers can purchase more bandwidth if slow network performance is consistent, although more bandwidth does not guarantee better website performance. Switching from WiFi to Ethernet usually results in a more consistent Internet connection and typically improves Internet speed. Users should also keep their Internet equipment current by regularly applying firmware updates and replacing equipment as necessary.